For this project, I used Python to try to scrape job listings from the websites of three popular professional associations:

- Society of American Archivists (SAA)
- American Library Association (ALA)
- Association for Information Science and Technology (ASIS&T)

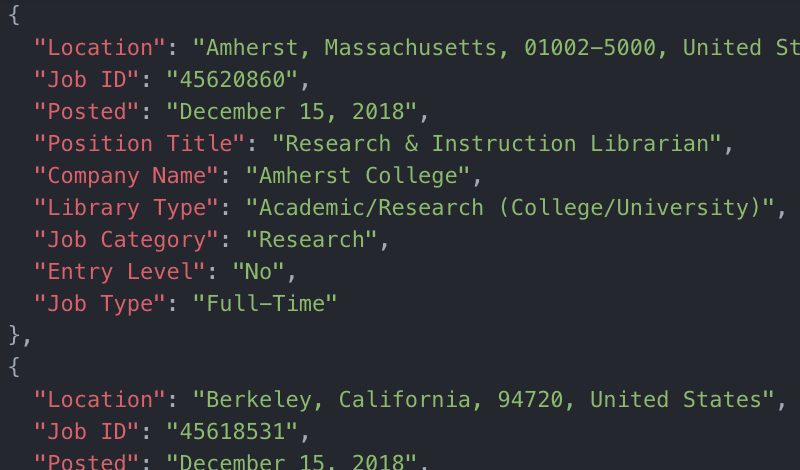

I first scraped each website for links to its detailed job postings and stored the URLs in a JSON file. Next, I scraped each detailed job page (using different code to suit each website's format) and saved the results in a JSON file. I successfully scraped the ASIS&T and ALA websites but could not scrape the SAA website, so my dataset includes only jobs from ASIS&T and ALA. Finally, I compiled the ASIS&T and ALA jobs into one JSON file (master_job_list.json).
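The final compile step could look something like the sketch below. It assumes each association's jobs were saved as a JSON list of dictionaries; the function name `merge_job_files`, the added `source` field, and the input filenames in the usage note are hypothetical, not taken from the project.

```python
import json


def merge_job_files(sources, output_path):
    """Merge per-association job lists into one master list.

    `sources` maps a label (e.g. "ASIS&T") to the path of a JSON file
    holding a list of job dictionaries. Each job is copied and tagged
    with a hypothetical "source" field so its origin is not lost.
    """
    master = []
    for label, path in sources.items():
        with open(path, encoding="utf-8") as f:
            jobs = json.load(f)
        for job in jobs:
            master.append({**job, "source": label})

    # Write the combined list; ensure_ascii=False keeps non-ASCII text readable.
    with open(output_path, "w", encoding="utf-8") as f:
        json.dump(master, f, indent=2, ensure_ascii=False)
    return master
```

For example, `merge_job_files({"ASIS&T": "asist_jobs.json", "ALA": "ala_jobs.json"}, "master_job_list.json")` would produce the combined file, where the two input filenames are placeholders.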

For this project, I used four modules:

- Requests: an HTTP library that simplifies making HTTP requests
- Beautiful Soup: helps with searching and pulling data from HTML or XML documents
- JSON: used to create and edit .json files
- Time: used to add delays between executions of the code
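One way these four modules could fit together in the two-stage process (collect links, then scrape each detail page) is sketched below. This is not the project's actual code: the CSS selector, the `h1` title lookup, the `User-Agent` string, the two-second delay, and the function names `extract_links`, `extract_job`, and `scrape` are all hypothetical, and each site would need its own selectors.

```python
import json
import time
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

# Hypothetical settings; real values depend on each site.
HEADERS = {"User-Agent": "job-scraper-sketch/0.1"}
DELAY_SECONDS = 2  # pause between requests so the server is not overloaded


def extract_links(html, base_url, selector):
    """Return absolute URLs for every <a href> matched by a CSS selector."""
    soup = BeautifulSoup(html, "html.parser")
    links = []
    for anchor in soup.select(selector):
        href = anchor.get("href")
        if href:
            # Resolve relative links like "/jobs/1" against the listing URL.
            links.append(urljoin(base_url, href))
    return links


def extract_job(html, url):
    """Pull a title and the page text from a detail page (the h1 lookup is an assumption)."""
    soup = BeautifulSoup(html, "html.parser")
    title = soup.find("h1")
    return {
        "url": url,
        "title": title.get_text(strip=True) if title else None,
        "text": soup.get_text(" ", strip=True),
    }


def scrape(listing_url, link_selector, links_path, jobs_path):
    # Step 1: collect detail-page links from the listing page and save them.
    response = requests.get(listing_url, headers=HEADERS, timeout=30)
    response.raise_for_status()
    links = extract_links(response.text, listing_url, link_selector)
    with open(links_path, "w", encoding="utf-8") as f:
        json.dump(links, f, indent=2)

    # Step 2: visit each detail page, pausing before every request.
    jobs = []
    for url in links:
        time.sleep(DELAY_SECONDS)
        page = requests.get(url, headers=HEADERS, timeout=30)
        if page.ok:
            jobs.append(extract_job(page.text, url))
    with open(jobs_path, "w", encoding="utf-8") as f:
        json.dump(jobs, f, indent=2)
    return jobs
```

A call such as `scrape("https://example.org/jobs", "a.job-link", "ala_links.json", "ala_jobs.json")` would run both stages for one site; the URL, selector, and filenames are placeholders. Keeping parsing separate from fetching makes it possible to check the selectors against saved HTML without requesting the live site.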