A Study of Google Vision and Amazon Rekognition Image Classification for the MET Highlights

By: Seth Crider

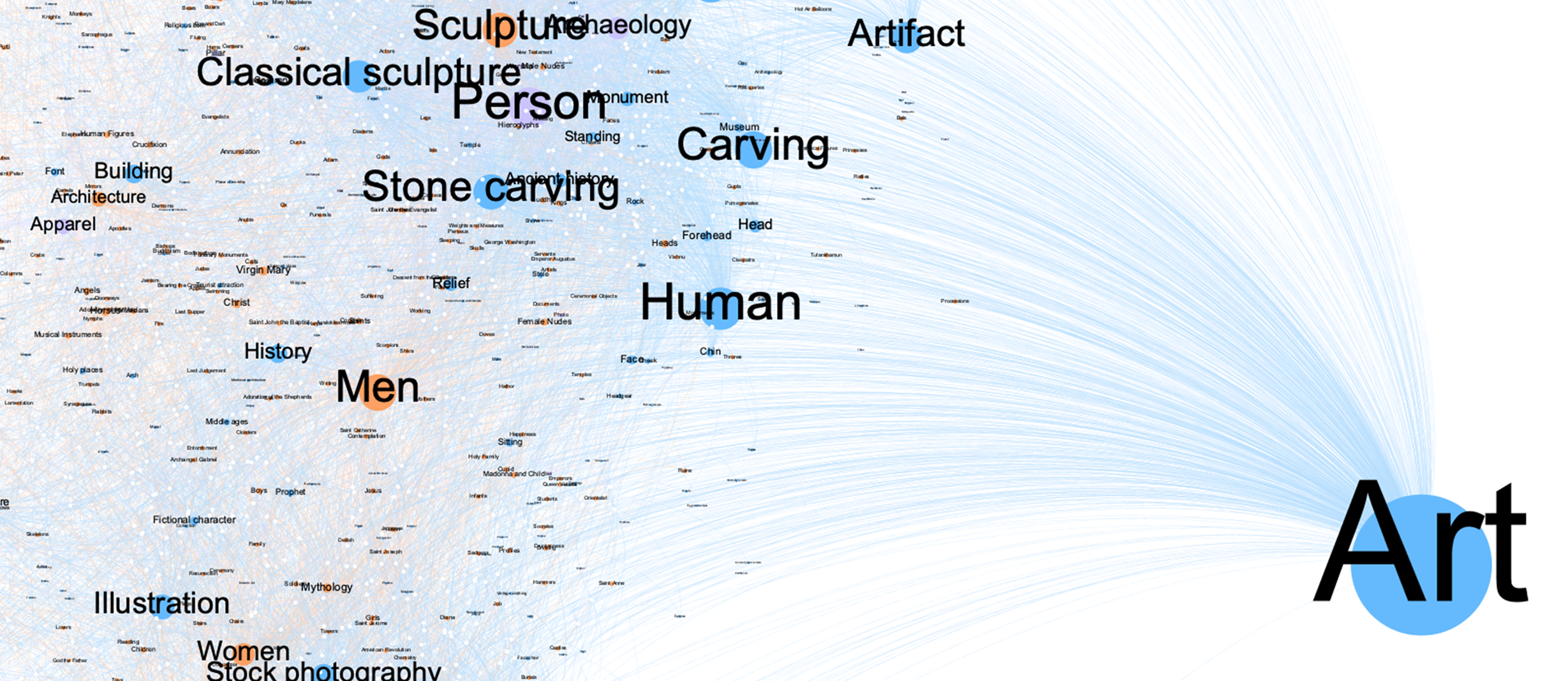

A visual and quantitative analysis of Google Vision, Amazon Rekognition, and human-generated tags for the collection highlights of the Metropolitan Museum of Art in New York.

With artificial intelligence’s increasing influence over the way we search for and interpret content in digital domains, it can be difficult to dissect where the qualities of labels and associations come from, and how algorithms factor into our engagement and understanding. As cultural institutions consider adopting more of these ‘invisible’ strategies to organize and facilitate their digital and physical collections, I thought it would be interesting to dissect and navigate some of these strategies for myself. I hope the resulting visualizations and analysis will encourage thoughtful exploration of the collection’s existing organization by humans, as well as interest in the performance of image classification models as a tool to diversify and broaden the scope of tags.