This is my final project for INFO_664 Programming for Cultural Heritage. It was undertaken as part of an ongoing project by The New School’s Libraries, Collections, and Academic Services department, where I work as a Library Technology Assistant, to redesign our website. As part of this project, we worked with Institutional Research to survey students and faculty at The New School about what they thought of our current website and how they use library websites in general. The survey was live on Qualtrics for six weeks, from September 29, 2021 to November 11, 2021, and was sent by email to 14,817 people: 12,433 students and 2,384 faculty. Although data was recorded for 1,265 surveys, only 826 of these were completed, and only these complete responses are included here. This gives an overall response rate of 5.6%: 17.7% for faculty (422 responses) and 3.2% for students (404 responses).
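Each response rate is the number of completed surveys divided by the number of surveys sent. A quick check of that arithmetic in Python, using only the figures stated above:

```python
# Figures taken from the survey description above.
invited = {"students": 12_433, "faculty": 2_384}
completed = {"students": 404, "faculty": 422}


def response_rate(done: int, sent: int) -> float:
    """Return the completed-response rate as a percentage, rounded to one decimal."""
    return round(100 * done / sent, 1)


overall = response_rate(sum(completed.values()), sum(invited.values()))
by_group = {group: response_rate(completed[group], invited[group]) for group in invited}
print(overall, by_group)
```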

Once the survey closed, I exported the results as a CSV, stripped all identifying information, and renamed some of the columns for clarity and to streamline the coding process later. This master file, SurveyData.csv, is not included here for privacy reasons. The DatasetParser.py script creates separate CSVs from it for faculty and student responses, then further splits the student responses into Undergraduate, Graduate, and Continuing Education students. Although the code in this project focuses on the overall results, with some investigation into the differences between faculty and student responses, future work will explore the differences in responses between student types.
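DatasetParser.py itself is not reproduced here. As a rough sketch of the kind of split it performs, the snippet below uses Python’s standard `csv` module. The column names `Role` and `StudentLevel`, their values, and the `split_rows`/`write_groups` helpers are hypothetical stand-ins, since SurveyData.csv is private, and the real script may well use pandas instead.

```python
import csv
import io
from collections import defaultdict
from pathlib import Path

# Hypothetical column names and values; the real SurveyData.csv is private.
ROLE_COL = "Role"            # e.g. "Faculty" or "Student"
LEVEL_COL = "StudentLevel"   # e.g. "Undergraduate", "Graduate", "Continuing Education"


def split_rows(rows):
    """Group response rows by role, and student rows again by student level."""
    groups = defaultdict(list)
    for row in rows:
        role = row[ROLE_COL]
        groups[role].append(row)
        if role == "Student" and row[LEVEL_COL]:
            groups[row[LEVEL_COL]].append(row)
    return groups


def write_groups(groups, fieldnames, out_dir):
    """Write each group to its own CSV, e.g. 'ContinuingEducation.csv'."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    for name, rows in groups.items():
        path = out_dir / f"{name.replace(' ', '')}.csv"
        with path.open("w", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(rows)


# Usage with a tiny made-up sample instead of the private file.
sample = io.StringIO(
    "ResponseId,Role,StudentLevel\n"
    "R1,Faculty,\n"
    "R2,Student,Graduate\n"
    "R3,Student,Undergraduate\n"
)
reader = csv.DictReader(sample)
groups = split_rows(reader)
print({name: len(rows) for name, rows in groups.items()})
# write_groups(groups, reader.fieldnames, "output")  # would create one CSV per group
```

Keeping the grouping separate from the file writing makes the split easy to check on a small sample before running it against the full export.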

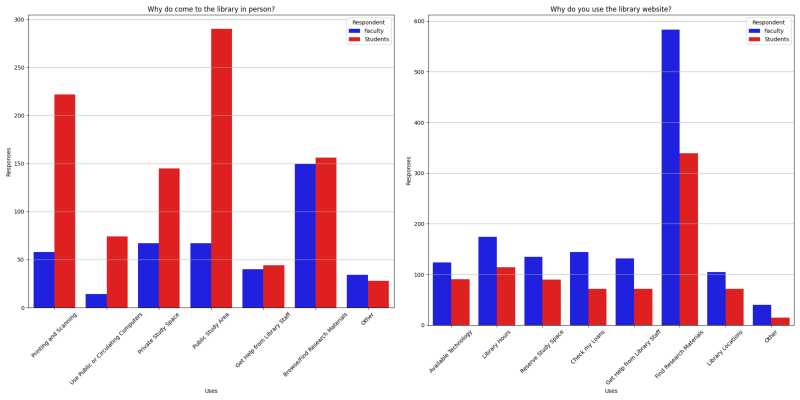

The remaining scripts each focus on a different aspect of the data; they are explained further in the “How to Use this Code” section of this project’s README file. Since most of the exported data was text, and many of the survey questions used a “check all that apply” format, I used `for` loops to count specific responses to each question. I then used pandas to format these counts for plotting with Seaborn and Matplotlib. I also worked closely with my supervisor, Joshua Dull, Assistant Director for Library Systems, on text analysis of the survey’s short-answer questions. This involved using the Natural Language Toolkit (NLTK) to filter out stopwords and other extraneous words I identified while working with the data. The filtered responses were then analyzed for the most common words in each question, as well as the most common collocations (words that frequently appear together), which give a better sense of the context in which those words are used. In addition to plotting the most common words with Seaborn, I used the wordcloud module to generate word clouds for these questions.
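The counting and word-frequency steps can be illustrated with a small standard-library sketch. It assumes, hypothetically, that a “check all that apply” answer is exported as a single cell with the chosen options separated by commas. It also uses a short hand-written stopword set in place of NLTK’s stopword corpus, and simple adjacent-pair counts in place of NLTK’s collocation tools (such as `BigramCollocationFinder`, which can also score pairs rather than just count them). The helper names and sample answers are illustrative, not the project’s own code or data.

```python
import re
from collections import Counter

# Hypothetical mini stopword list; the project used NLTK's stopwords
# plus extra words found while working with the data.
STOPWORDS = {"a", "an", "and", "the", "to", "of", "i", "is", "it",
             "in", "on", "for", "would", "be"}


def count_choices(cells, sep=","):
    """Tally options from 'check all that apply' answers stored as delimited text."""
    counts = Counter()
    for cell in cells:
        if not cell:  # respondent skipped the question
            continue
        for choice in cell.split(sep):
            choice = choice.strip()
            if choice:
                counts[choice] += 1
    return counts


def tokenize(text, stopwords=STOPWORDS):
    """Lowercase, split into words, and drop stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in stopwords]


def word_and_pair_counts(answers, stopwords=STOPWORDS):
    """Count words and adjacent word pairs across short answers.

    Pairs are formed within each answer, after stopword removal,
    so they never span two different respondents.
    """
    words, pairs = Counter(), Counter()
    for answer in answers:
        toks = tokenize(answer, stopwords)
        words.update(toks)
        pairs.update(zip(toks, toks[1:]))
    return words, pairs


# Illustrative (made-up) responses, not survey data.
checked = ["Find articles,Search the catalog", "Search the catalog", "",
           "Book a room,Search the catalog"]
print(count_choices(checked).most_common(1))

answers = ["I would like a simpler search page",
           "Search page is confusing",
           "simpler search please"]
words, pairs = word_and_pair_counts(answers)
print(words.most_common(3))
print(pairs.most_common(2))
```

Because the split is on commas, an answer option that itself contains a comma would be miscounted; matching cells against the known list of options avoids that. The resulting counts behave like dictionaries, so they can be loaded into a pandas DataFrame for a Seaborn bar plot or passed to `WordCloud.generate_from_frequencies` from the wordcloud module.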

The data and the charts generated from it, which are included in the BIOS, will inform the next stages of our website design process by helping us determine what our users want us to prioritize and what changes they would like to see.

Special thanks to The New School’s Institutional Research office, Joshua Dull, and Allen Jones.